2D Lighting Engine

Overview

Ultra Lights 2D is an advanced real-time 2D lighting engine I built for GameMaker, based on a system called Super Fast Soft Shadows. It’s easily one of my proudest achievements in software, and it was the project that taught me how to program shaders.

I won’t go in depth on integration details in this article, but I will provide some insight into the unique aspects of my system and development process.

Features:

- Full shadow rendering that includes umbra, penumbra, and antumbra

- Area lights and shadows for circular light sources

- High dynamic range colors and shadows (16 or 32-bit RGBA)

- Animated blue noise dithering for smoother shadow gradients

- Highly optimized: it can run at thousands of frames per second on PCs with dedicated GPUs

- Most features can be ported to WebGL for use in browsers

Backstory

In 2016, I came across this 2D lighting demo by a YouTuber named Slembcke. I always enjoy seeing what independent developers build, but this one was especially fascinating to me. It seemed to actually simulate area lighting to some degree without major performance costs. At the time, path tracing, ray tracing, and radiosity were the only techniques I knew of that achieved this effect, and each came with major drawbacks. Since there was nothing else quite like it, I often wondered how it worked and whether I could ever create something similar. Back then, I barely even knew what shaders were.

Fast forward to 2022, and I was interested in lighting systems again. While browsing for images of shadow antumbras for research, an image from this post popped up. I was astonished and ecstatic. It had only been about six months since he posted it. The post itself goes in depth on how the shadow system actually works and what the theory is, and it also provides a WebGL example for people to try out. Finally, I had enough information to understand and build the system.

What do shadows really look like anyway?

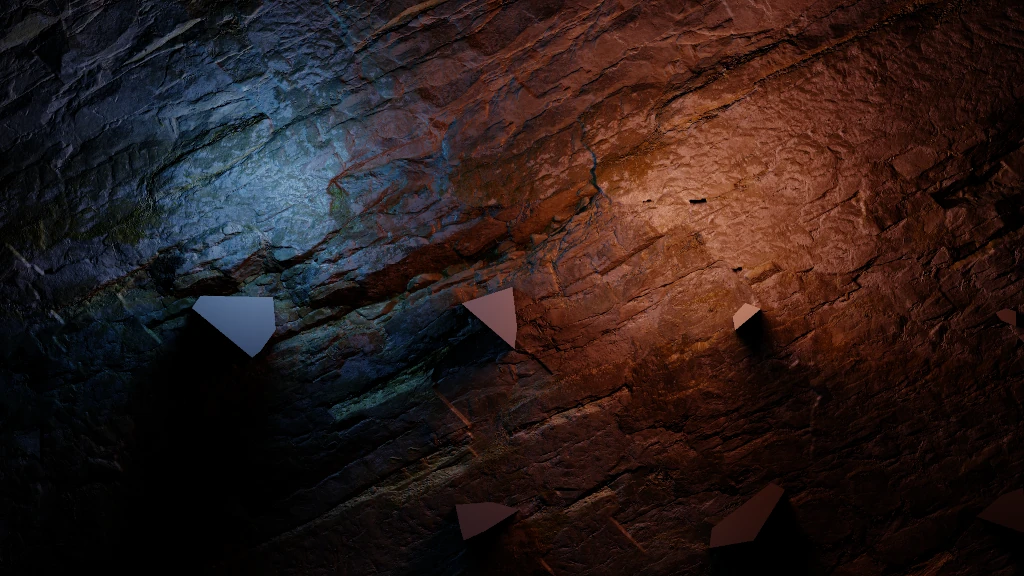

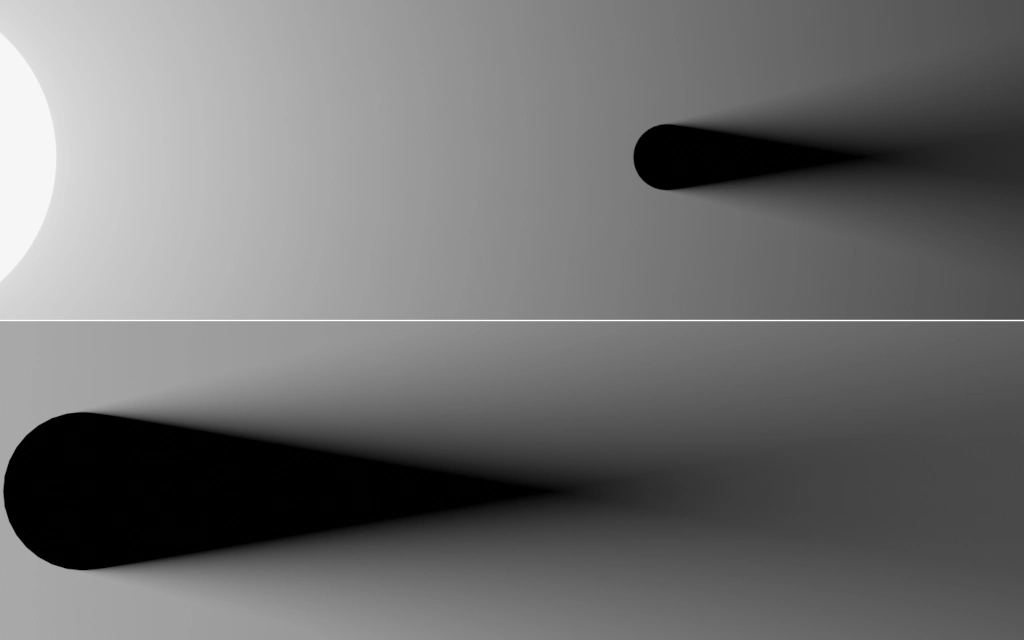

Antumbras are rarely seen in real-time graphics, and they’re not something I usually think about in the real world either. An antumbra occurs when light from a single source passes around all sides of an object. If you’ve ever seen an annular solar eclipse in person, then you know what it’s like to be in the antumbra of the Moon (during a total eclipse, you are in the umbra instead). Shadows get darker as more of the light becomes blocked, in a region called the penumbra. Where the light is blocked completely, the region is called the umbra. If the light source appears larger than the object and surrounds it on all sides, it produces an antumbra, a region where the shadow gets brighter again.
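To make the three regions concrete, here is a minimal Python sketch that classifies a point in 2D by comparing the angles that a circular light and a circular occluder subtend from that point. It is not code from the engine: the function name `classify_shadow` and its parameters are hypothetical, and it assumes a single occluder and a sample point that lies outside both circles.

```python
import math

def classify_shadow(point, light, light_radius, occluder, occluder_radius):
    """Return 'lit', 'penumbra', 'umbra', or 'antumbra' for a 2D point.

    Assumes the point lies outside both circles (illustrative sketch only).
    """
    lx, ly = light[0] - point[0], light[1] - point[1]
    ox, oy = occluder[0] - point[0], occluder[1] - point[1]
    dist_light = math.hypot(lx, ly)
    dist_occluder = math.hypot(ox, oy)
    if dist_light <= light_radius or dist_occluder <= occluder_radius:
        raise ValueError("point must lie outside both circles")

    # Simplification: an occluder farther away than the light blocks nothing.
    if dist_occluder >= dist_light:
        return "lit"

    # Half of the angle each circle subtends as seen from the point.
    half_light = math.asin(light_radius / dist_light)
    half_occluder = math.asin(occluder_radius / dist_occluder)

    # Unsigned angle between the directions to the two centers.
    separation = abs(math.atan2(lx * oy - ly * ox, lx * ox + ly * oy))

    if separation >= half_light + half_occluder:
        return "lit"        # the angular intervals do not overlap
    if separation + half_light <= half_occluder:
        return "umbra"      # the occluder covers the whole light
    if separation + half_occluder < half_light:
        return "antumbra"   # the light is visible on both sides of the occluder
    return "penumbra"       # the light is partially covered from one side

# Light of radius 2 at the origin, occluder of radius 1 at (5, 0).
for sample in [(7, 0), (7, 1.5), (30, 0), (7, 10)]:
    print(sample, classify_shadow(sample, (0, 0), 2, (5, 0), 1))
```

With these numbers the umbra ends 5 units behind the occluder, so a point directly behind it is in the umbra when close and in the antumbra when far away.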

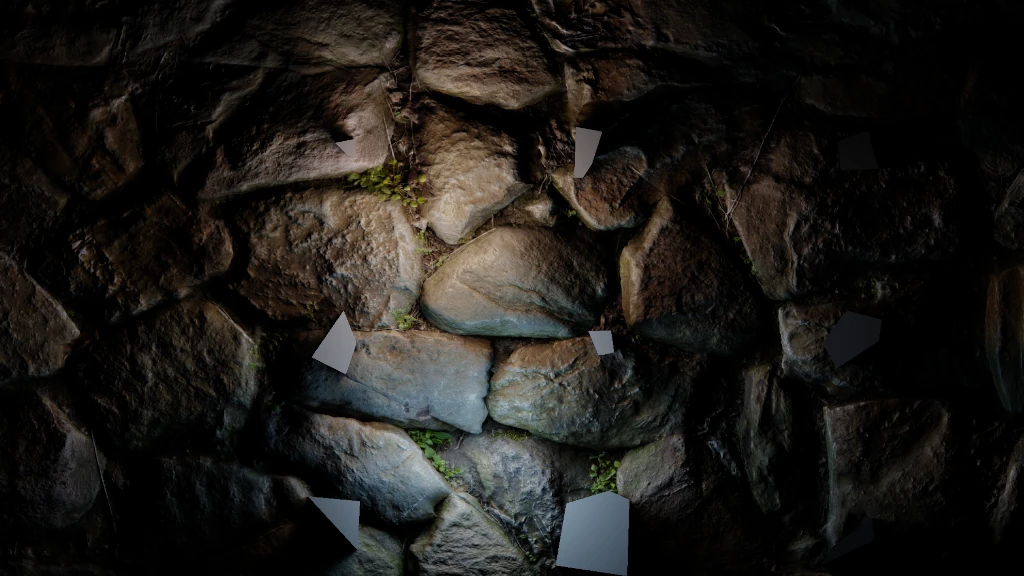

To get a good idea of what the phenomenon should look like, I did a few high-fidelity path-traced renders in Blender. Only direct illumination is simulated, meaning there is no light bouncing off of surfaces. A simple diffuse surface is used in the background, and the light source is kept as short as possible while still producing enough rays to fill the scene. The scene is also rendered with an orthographic camera. I did a few different variations to get a sense of how different kinds of geometry should cast shadows from large light sources.

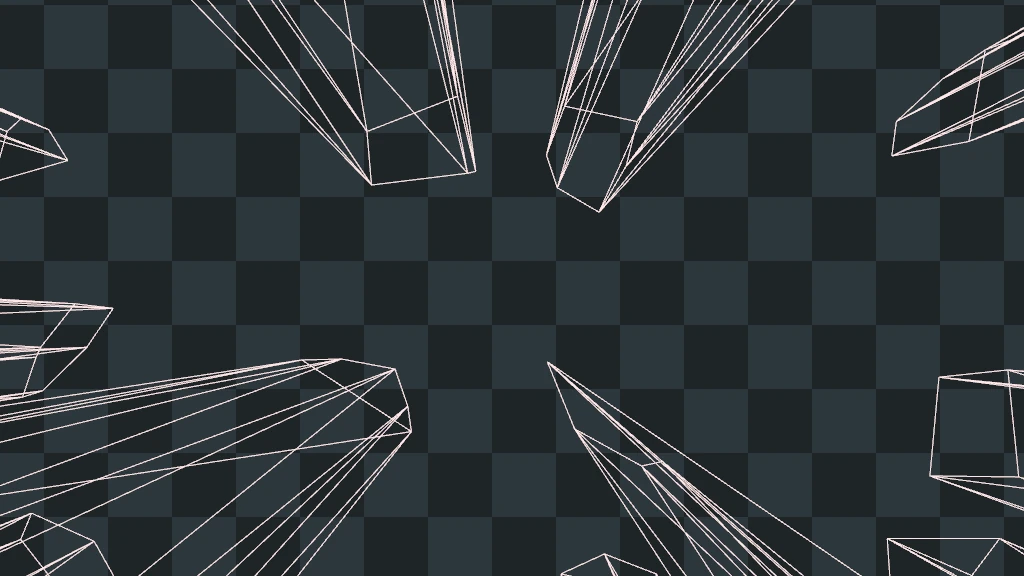

Shadow Geometry

At runtime, a configurable grid of polygons is generated from the world’s geometry via the gift wrapping algorithm. Any polygonal shape can be used for the lighting, but this step only produces convex polygons due to a limitation of the physics engine. To project shadows, an index of the vertices of this static geometry is kept in memory.
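For readers unfamiliar with gift wrapping (also known as the Jarvis march), here is a minimal Python sketch of the algorithm on a plain list of points. It is an illustration rather than the engine's GameMaker code; the names `gift_wrap`, `cross`, and `dist2` are hypothetical, and the grid partitioning mentioned above is not shown.

```python
def cross(o, a, b):
    """Z component of (a - o) x (b - o); negative when b is clockwise of a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def dist2(a, b):
    """Squared distance between two points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def gift_wrap(points):
    """Return the convex hull of 2D points in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts

    start = pts[0]  # the leftmost point is always on the hull
    hull = []
    current = start
    while True:
        hull.append(current)
        # Start from any other point, then keep the most clockwise one.
        candidate = pts[0] if pts[0] != current else pts[1]
        for p in pts:
            if p == current:
                continue
            turn = cross(current, candidate, p)
            farther = dist2(current, p) > dist2(current, candidate)
            if turn < 0 or (turn == 0 and farther):
                candidate = p
        current = candidate
        if current == start:  # wrapped all the way around
            break
    return hull

print(gift_wrap([(0, 0), (2, 0), (2, 2), (0, 2), (1, 1)]))
```

The interior point `(1, 1)` is discarded, which is why the result is always convex regardless of the input shape.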

Once rendering starts, a vertex shader projects new points from this geometry, extruding them away from a light source out to infinity via homogeneous coordinates. A single quad is used for each side of a polygon. The extruded end points of this quad are used to test how “visible” they are from a particular light source. The pixel shader can then do a straightforward interpolation between the points, producing a shadow gradient. Unfortunately, this also means there’s a good amount of overdraw, as polygons often end up covering one another, but most modern GPUs can power through this.
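The extrusion step can be sketched in a few lines. In homogeneous coordinates, a vertex with `w = 1` is an ordinary point, while a vertex with `w = 0` is a direction that the rasterizer treats as a point infinitely far away. The Python below is a simplified illustration of that idea only, assuming a point light; the name `shadow_quad` is hypothetical, and the penumbra and visibility calculations the real shaders perform are left out.

```python
def shadow_quad(a, b, light):
    """Build one shadow quad for the polygon edge a-b as (x, y, w) vertices.

    The first two vertices stay on the edge (w = 1). The last two are the
    directions from the light through each end point (w = 0), which places
    them at infinity along those directions.
    """
    ax, ay = a
    bx, by = b
    lx, ly = light
    return [
        (ax, ay, 1.0),
        (bx, by, 1.0),
        (bx - lx, by - ly, 0.0),
        (ax - lx, ay - ly, 0.0),
    ]

# Edge from (1, 0) to (1, 1), lit from the origin.
for vertex in shadow_quad((1, 0), (1, 1), (0, 0)):
    print(vertex)
```

Because `(x + t*dx, y + t*dy, 1)` is equivalent to `(x/t + dx, y/t + dy, 1/t)`, pushing a point farther along the direction `(dx, dy)` approaches `(dx, dy, 0)` as `t` grows, so no large "far enough" distance has to be picked by hand.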

Dithering

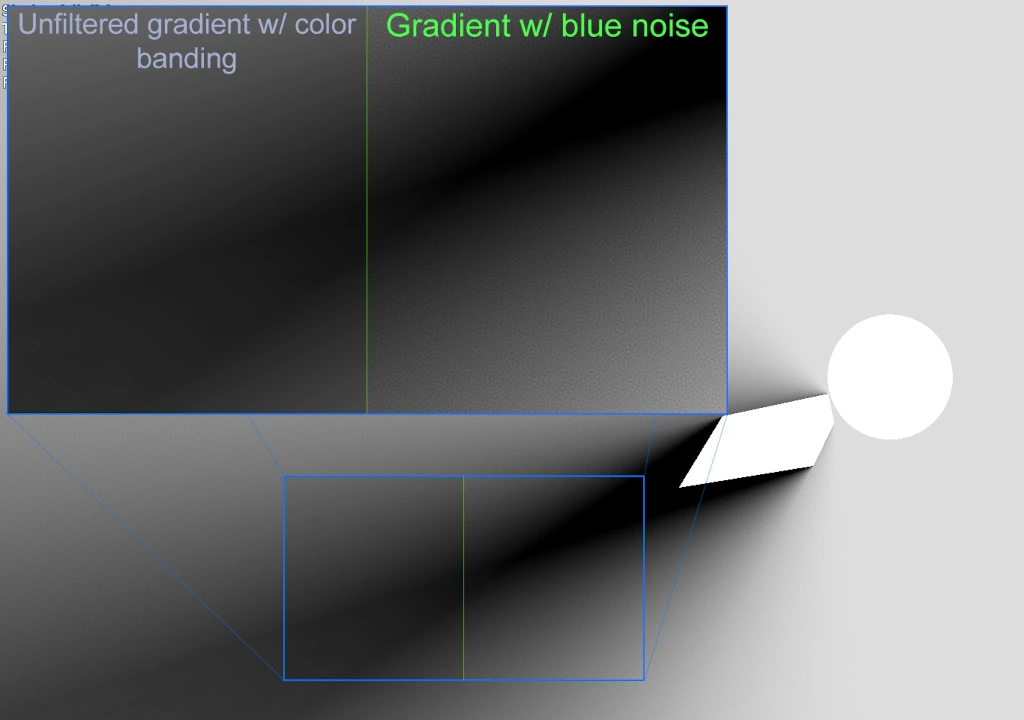

For most of the development cycle, shadows could only be rendered in 8-bit channels (0-255). This creates a perceived lack of detail known as “color banding”, which is common in lighting systems. Thankfully, blue noise is a great solution to this issue. The image above shows the effect it has in an older version of the engine. Much later on, banding was further alleviated with the introduction of HDR colors and shadowmaps.
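The reason dithering helps can be shown with a small numeric experiment. The Python sketch below adds a noise value in the range 0 to 1 before truncating to 8 bits, so that neighboring pixels average out to the intended brightness instead of snapping to the same level. Note that it uses plain white noise from Python's `random` module as a stand-in for a blue noise texture, and the function names are hypothetical; blue noise distributes the same error more evenly across the image, and animating it varies the pattern per frame.

```python
import math
import random

def quantize(value):
    """Convert a 0..1 value to 8 bits by rounding (produces banding)."""
    return min(255, max(0, round(value * 255)))

def quantize_dithered(value, noise):
    """Convert a 0..1 value to 8 bits, offset by a noise sample in [0, 1)."""
    return min(255, max(0, math.floor(value * 255 + noise)))

rng = random.Random(0)   # white noise stand-in for a blue noise texture
target = 100.4 / 255     # a brightness that falls between two 8-bit levels

samples = [quantize_dithered(target, rng.random()) for _ in range(20000)]
average = sum(samples) / len(samples)

print("rounded:", quantize(target))
print("dithered levels used:", sorted(set(samples)))
print("dithered average:", average)
```

Rounding alone always lands on level 100, while the dithered pixels mix levels 100 and 101 in proportions that average close to the intended 100.4.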

Future Plans

I want to continue refining this system for use in future projects. Here are some of the features I plan to implement:

- Multiple quality modes

- Convex polygonal light sources

- Tone mapping/automatic exposure (currently experimental)

- Shadow geometry culling

- Moving occluders